Python Transformation Example Scripts

The script below are example scripts that users can use as reference to implement their own script for many different algorithms.

Algorithm Name

|

Script |

|---|---|

|

In the example script below, the Python Transformation pipeline step is defined to run PARC (PARC: ultrafast and accurate clustering of phenotypic data of millions of single cells; Shobana V Stassen; Bioinformatics, Volume 36, Issue 9, 1 May 2020).

Note: to run this experiment, Python, pandas and parc should be properly installed on the user computer.

#This script allows you to run PARC as a Python Transformation pipeline step in FCS Express 7) try: #Function to check whether an object is float

|

|

In the example script below, the Python Transformation pipeline step is defined to run TriMap (TriMap: Large-scale Dimensionality Reduction Using Triplets; Ehsan Amid, Manfred K. Warmuth; arXiv:1910.00204; submitted on 1 Oct 2019).

Note: to run this experiment, Python, pandas and trimap should be properly installed on the user computer.

# Trimap from FcsExpress import * try: from pandas import DataFrame except ModuleNotFoundError: raise RuntimeError("Current Python distribution does not contain Pandas. " + "To test this script please install pandas: " + "(https://pandas.pydata.org/pandas-docs/stable/getting_started/install.html)")

try: import trimap except ModuleNotFoundError: raise RuntimeError("Current Python distribution does not contain trimap. " + "To test this script please install trimap: " + "(https://pypi.org/project/trimap/)")

parameter_map = { "n_inliers": "Number Nearest Neighbors", "n_outliers": "Number Outliers", "n_random": "Number Random Triplets", "distance": "Distance Measure", "weight_adj": "Gamma", "lr": "Learning Rate", "n_iters": "Number Iterations" }

def RegisterOptions(params): RegisterIntegerOption(parameter_map["n_inliers"], 10) RegisterIntegerOption(parameter_map["n_outliers"], 5) RegisterIntegerOption(parameter_map["n_random"], 5) RegisterStringOption(parameter_map["distance"], "euclidean") RegisterFloatOption(parameter_map["weight_adj"], 500.0) RegisterFloatOption(parameter_map["lr"], 1000.0) RegisterIntegerOption(parameter_map["n_iters"], 400)

def RegisterParameters(opts, params): RegisterParameter("Trimap 1") RegisterParameter("Trimap 2")

def Execute(opts, data, res): df = DataFrame(data) embedding = trimap.TRIMAP( n_inliers=opts[parameter_map["n_inliers"]], n_outliers=opts[parameter_map["n_outliers"]], n_random=opts[parameter_map["n_random"]], distance=opts[parameter_map["distance"]], weight_adj=opts[parameter_map["weight_adj"]], lr=opts[parameter_map["lr"]], n_iters=opts[parameter_map["n_iters"]] ).fit_transform(df.to_numpy()) res["Trimap 1"] = embedding[:, 0] res["Trimap 2"] = embedding[:, 1] return res

|

|

In this example script, the Python Transformation pipeline step is defined to run HDBSCAN (Campello R.J.G.B., Moulavi D., Sander J. (2013) Density-Based Clustering Based on Hierarchical Density Estimates. In: Pei J., Tseng V.S., Cao L., Motoda H., Xu G. (eds) Advances in Knowledge Discovery and Data Mining. PAKDD 2013. Lecture Notes in Computer Science, vol 7819. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-37456-2_14).

HDBSCAN can be useful to identify clusters on UMAP plots. When HDBSCA is used for this purpose, creating a UMAP map with dense clusters (i.e. keeping the UMAP "Min Low Dim Distance" value low) will help HDBSCAN clustering. More information on DBSCAN for Python can be found here.

Note #1: to run this experiment, Python, pandas, and hdbscan should be properly installed on the user computer.

Note #2: If noisy data points are detected by HDBSCA, they will be grouped under "HDBSCAN Cluster Assignment" equal to "1" in FCS Express.

Note #3: with large dataset, HDBSCAN might return a "Could not find -c" error. If that happens, setting core_dist_n_jobs to 1 might solve the issue.

#HDBSCAN #See if doing default min_samples print("Running HDBSCAN on {} data points, with min_cluster_size={}, min_samples={} and core_dist_n_jobs={}".format(NumberDataPoints,opts["min_cluster_size"],min_samples,opts["core_dist_n_jobs"])) clusterer = hdbscan.HDBSCAN(min_cluster_size=opts["min_cluster_size"], #So, in case noisy data points are present, cluster labels are increased by 1 if min(cluster_labels)==-1:

|

|

In this example script, the Python Transformation pipeline step is defined to run Fit-SNE (Fast interpolation-based t-SNE for improved visualization of single-cell RNA-seq data; Linderman, G.C., Rachh, M., Hoskins, J.G. et al.; Nat Methods 16, 243–245 (2019). https://doi.org/10.1038/s41592-018-0308-4) through the openTSNE Python implementation. Note: to run this experiment, Python, pandas and openTSNE should be properly installed on the user computer. #This script allow to run openTSNE as a Python Transformation pipeline step in FCS Express 7

|

|

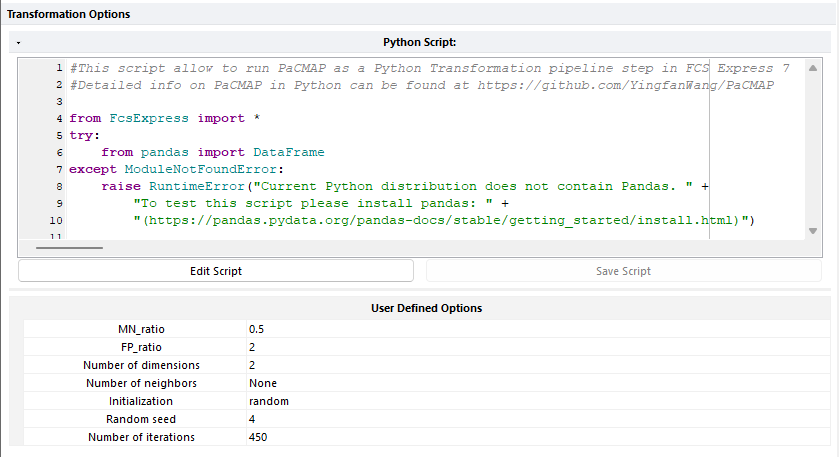

In this example script, the Python Transformation pipeline step is defined to run PaCMAP (Wang and Huang, at al., Understanding How Dimension Reduction Tools Work: An Empirical Approach to Deciphering t-SNE, UMAP, TriMap, and PaCMAP for Data Visualization; Journal of Machine Learning Research 22 (2021) 1-73). More information on PaCMAP for Python can be found here.

Note: to run this experiment, Python, pandas, and pacmap should be properly installed on the user computer.

#This script allow to run PaCMAP as a Python Transformation pipeline step in FCS Express 7 #Detailed info on PaCMAP in Python can be found at https://github.com/YingfanWang/PaCMAP

from FcsExpress import * try: from pandas import DataFrame except ModuleNotFoundError: raise RuntimeError("Current Python distribution does not contain Pandas. " + "To test this script please install pandas: " + "(https://pandas.pydata.org/pandas-docs/stable/getting_started/install.html)")

try: import pacmap except ModuleNotFoundError: raise RuntimeError("Current Python distribution does not contain Pacmap. " + "To test this script please install pacmap: " + "(https://github.com/YingfanWang/PaCMAP)")

def RegisterOptions(params): RegisterIntegerOption("Number of dimensions", 2) RegisterStringOption("Number of neighbors", "None") RegisterFloatOption("MN_ratio", 0.5) RegisterFloatOption("FP_ratio", 2.0) RegisterStringOption("Initialization","pca") RegisterIntegerOption("Random seed", 4) RegisterIntegerOption("Number of iterations", 450)

def RegisterParameters(opts, params): for dim in range(opts["Number of dimensions"]): RegisterParameter("PaCMAP {}".format(dim + 1))

def Execute(opts, data, res):

#Reading data df = DataFrame(data) X = df.to_numpy()

#See if doing default n_neighbors if opts["Number of neighbors"] == "None": n_neighbors = None # Setting n_neighbors to "None" leads to a default choice elif opts["Number of neighbors"].isnumeric(): n_neighbors = int(opts["Number of neighbors"]) else: raise Exception("The Number of neighbors option only accept None or integer values")

#Check initialization Initializations=["pca","random"] if opts["Initialization"] not in Initializations: raise Exception("The Initialization option only accept the following values: pca, random")

#Initializing the pacmap instance embedding = pacmap.PaCMAP( n_components=opts["Number of dimensions"], n_neighbors=n_neighbors, MN_ratio=opts["MN_ratio"], FP_ratio=opts["FP_ratio"], random_state=opts["Random seed"], num_iters=opts["Number of iterations"], verbose=True)

#Running PaCMAP X_transformed = embedding.fit_transform(X, init=opts["Initialization"])

print("Running PaCMAP with {} Initialization on {} data points, with Number of Neighbors set to {}".format(opts["Initialization"], NumberDataPoints, n_neighbors))

#Loop over dimensions and set results for dim in range(opts["Number of dimensions"]): res["PaCMAP {}".format(dim + 1)] = X_transformed[:, dim]

return res

|

|

In this example script, the Python Transformation pipeline step is defined to run Ivis (Szubert, B., Cole, J.E., Monaco, C. et al. Structure-preserving visualisation of high dimensional single-cell datasets. Sci Rep 9,8914 (2019). https://doi.org/10.1038/s41598-019-45301-0). More information on Ivis for Python can be found here and here.

Note: to run this script, Python, pandas and ivis should be properly installed on the user computer.

#This script allows you to run Ivis as a Python Transformation pipeline step in FCS Express 7 #Detailed info on Ivis in Python can be found at https://bering-ivis.readthedocs.io/en/latest/python_package.html

from FcsExpress import *

try: from pandas import DataFrame except ModuleNotFoundError: raise RuntimeError("Current Python distribution does not contain Pandas. " + "To test this script please install pandas: " + "(https://pandas.pydata.org/pandas-docs/stable/getting_started/install.html)")

try: from ivis import Ivis except ModuleNotFoundError: raise RuntimeError("Current Python distribution does not contain ivis. " + "To test this script please install ivis: " + "(https://github.com/beringresearch/ivis)")

try: from scipy import sparse except ModuleNotFoundError: raise RuntimeError("Current Python distribution does not contain scipy. " + "To test this script please install scypy: " + "(https://scipy.org/)")

def RegisterOptions(params): RegisterIntegerOption("Embedding Dimensions",2) RegisterIntegerOption("Number of Nearest Neighbours",15) RegisterIntegerOption("Number of epochs without progress",10) RegisterStringOption("Type of input", "numpy array") RegisterStringOption("Keras Model", "auto")

#The Number of Nearest Neighbours , the Number of epochs without progress #and the Keras Model are tunable parameters that should be selected #on the basis of dataset size and complexity. #Please refer to https://bering-ivis.readthedocs.io/en/latest/hyperparameters.html

def RegisterParameters(opts, params): for dim in range(opts["Embedding Dimensions"]): RegisterParameter("Ivis {}".format(dim + 1))

def Execute(opts, data, res): df = DataFrame(data) #Converting the input data to either numpy array or spare matrix based on the user selection if opts["Type of input"]=="numpy array": X=df.to_numpy() elif opts["Type of input"]=="sparse matrix": X=sparse.csr_matrix(df) else: raise Exception("The Type of input option only accept the following values: numpy array, sparse matrix")

# Determine model. For more details, see: # https://bering-ivis.readthedocs.io/en/latest/hyperparameters.html models = ["maaten","szubert","hinton"] if opts["Keras Model"]=="auto": if NumberDataPoints > 500000: keras_model = "szubert" else: keras_model = "maaten" elif opts["Keras Model"] in models: keras_model = opts["Keras Model"] else: raise Exception("The Model option only accept the following values: auto, maaten, szubert, hinton")

print("Running Ivis with keras model {} on {} data points, using {} as input data type".format(keras_model, NumberDataPoints,opts["Type of input"]))

# Set ivis parameters model = Ivis(embedding_dims = opts["Embedding Dimensions"], k = opts["Number of Nearest Neighbours"], n_epochs_without_progress = opts["Number of epochs without progress"], model = keras_model ) embeddings = model.fit_transform(X) # Generate embeddings #Loop over dimensions and set results for dim in range(opts["Embedding Dimensions"]): res["Ivis {}".format(dim + 1)] = embeddings[:, dim]

return res #return the res object that will be re-imported into FCS Express

|

|

In this example script, the Python Transformation pipeline step is defined to run Phate (Moon, K.R., van Dijk, D., Wang, Z. et al. Visualizing structure and transitions in high-dimensional biological data. Nat Biotechnol 37, 1482–1492 (2019). https://doi.org/10.1038/s41587-019-0336-3). More information on Phate for Python can be found here. Note: to run this script, Python, pandas and phate should be properly installed on the user computer. #This script allows you to run Phate as a Python Transformation pipeline step in FCS Express 7. #Detailed info on Phate in Python can be found at https://pypi.org/project/phate/ from FcsExpress import * try: from pandas import DataFrame except ModuleNotFoundError: raise RuntimeError("Current Python distribution does not contain Pandas. " + "To test this script please install pandas: " + "(https://pandas.pydata.org/pandas-docs/stable/getting_started/install.html)") try: import phate except ModuleNotFoundError: raise RuntimeError("Current Python distribution does not contain phate. " + "To test this script please install phate: " + "(https://pypi.org/project/phate/)") parameter_map = { "knn": "Number Nearest Neighbors", "n_components": "Number of dimensions" } def RegisterOptions(params): #The full list of available parameters can be found at #https://phate.readthedocs.io/en/stable/api.html RegisterIntegerOption(parameter_map["knn"], 5) RegisterIntegerOption(parameter_map["n_components"], 2) def RegisterParameters(opts, params): for dim in range(opts[parameter_map["n_components"]]): RegisterParameter("Phate {}".format(dim + 1)) def Execute(opts, data, res): df = DataFrame(data) phate_operator = phate.PHATE( knn=opts[parameter_map["knn"]], n_components=opts[parameter_map["n_components"]] ) df_phate = phate_operator.fit_transform(df) #Loop over dimensions and set results for dim in range(opts[parameter_map["n_components"]]): res["Phate {}".format(dim + 1)] = df_phate[:, dim] return res |